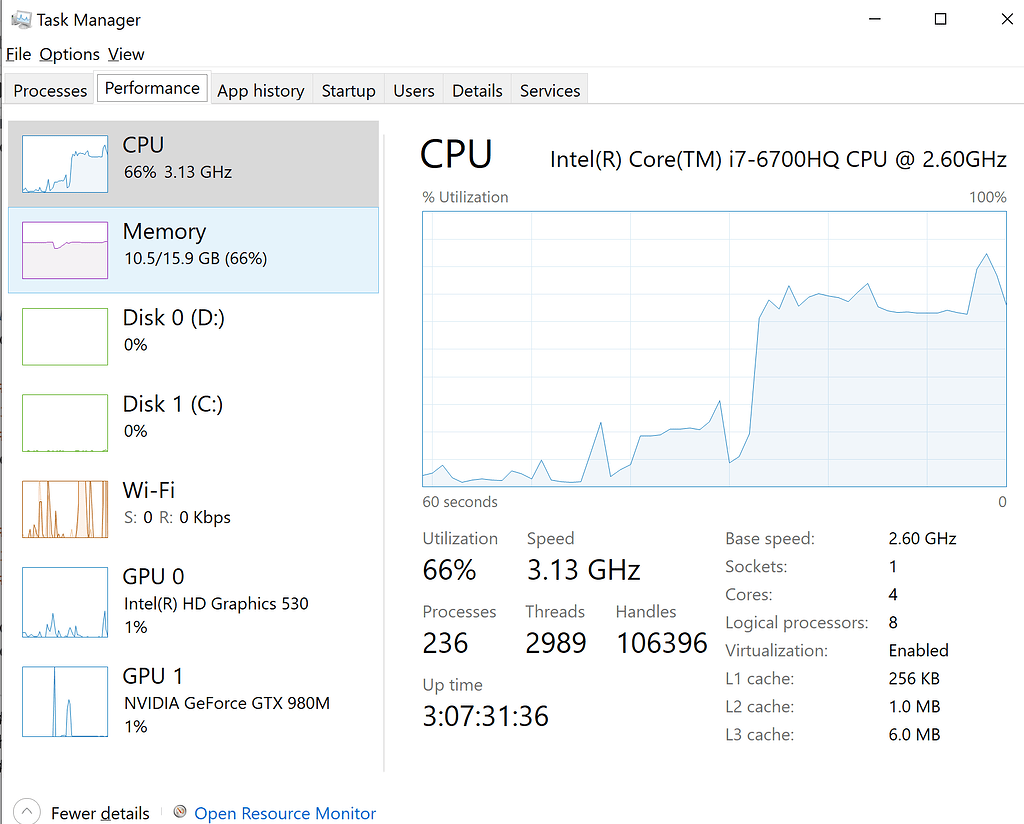

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

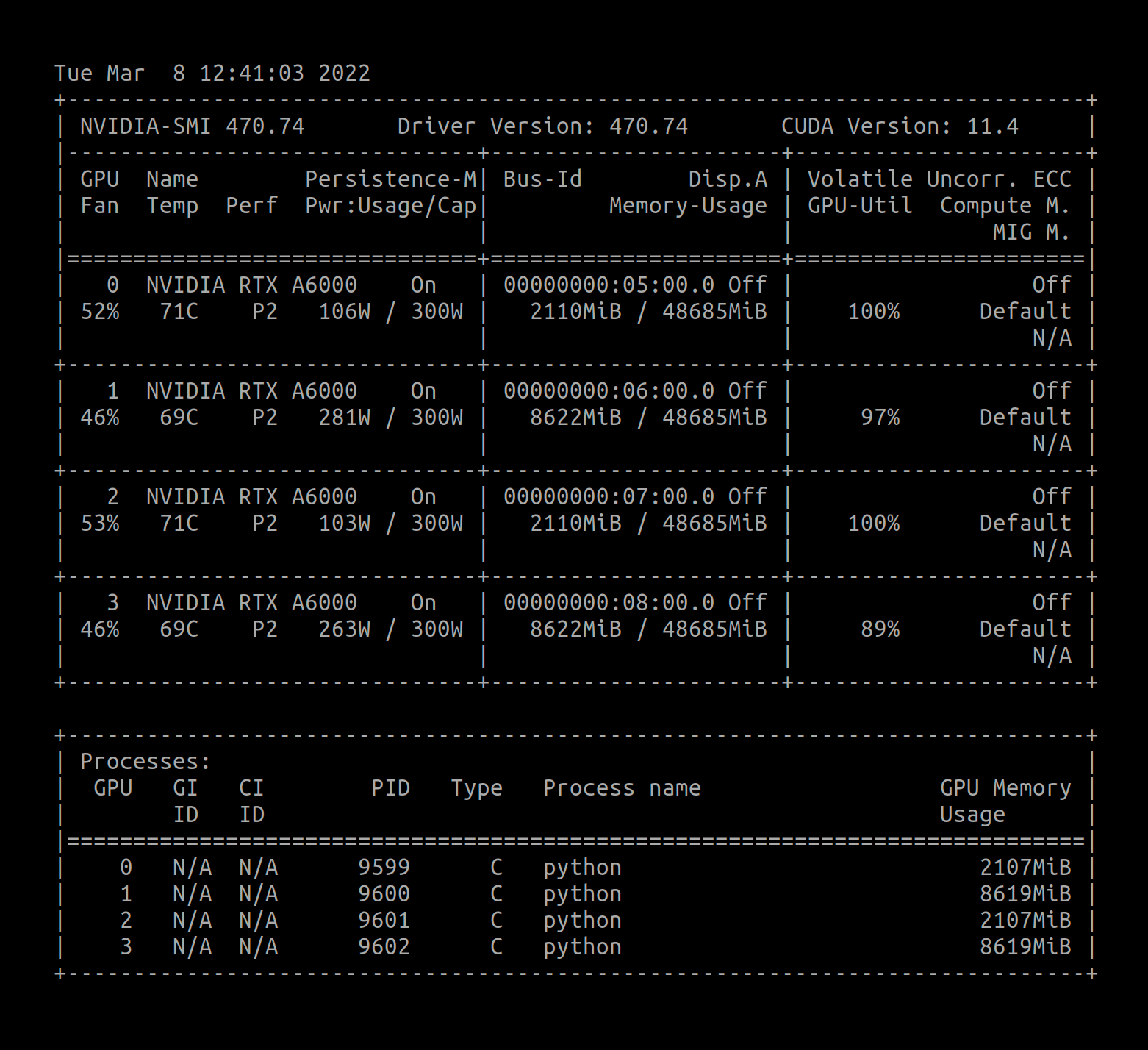

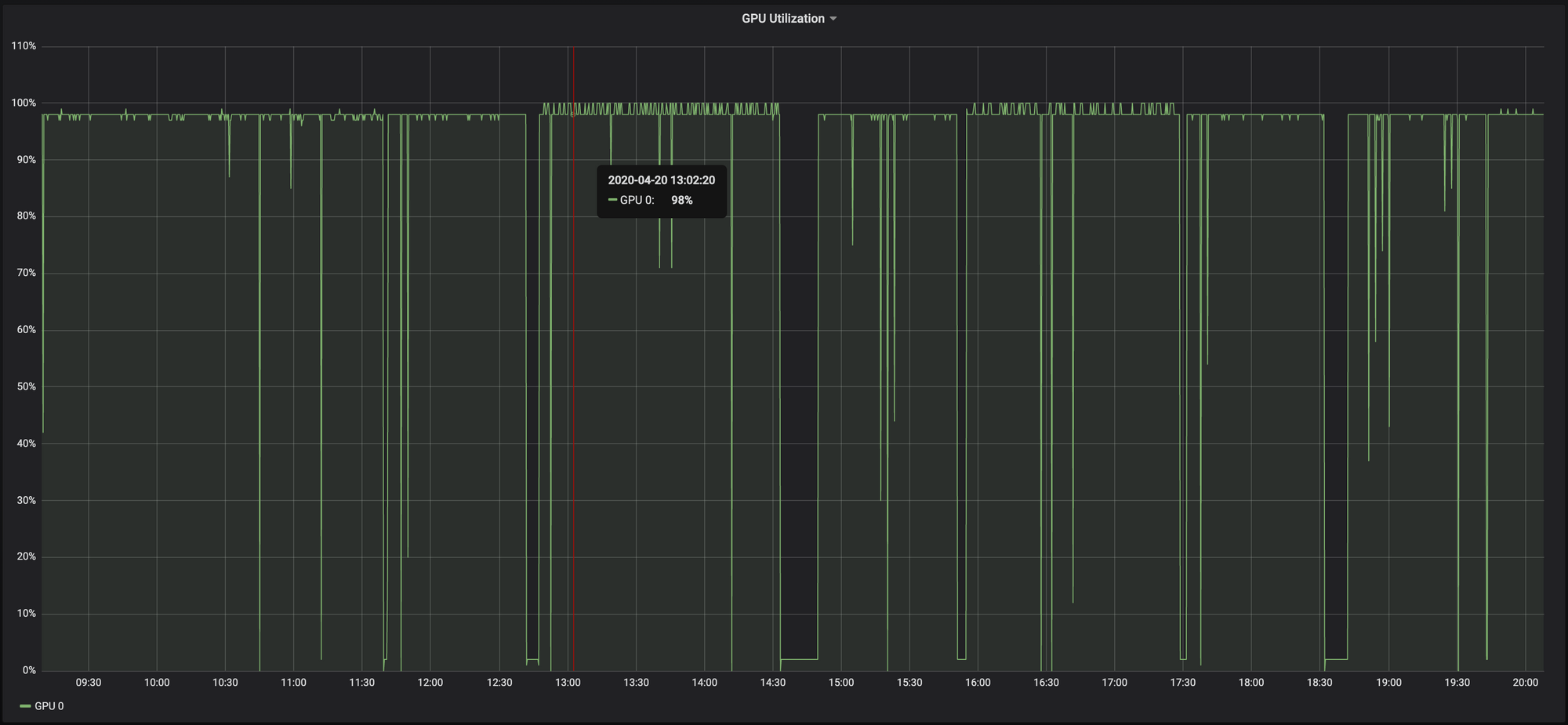

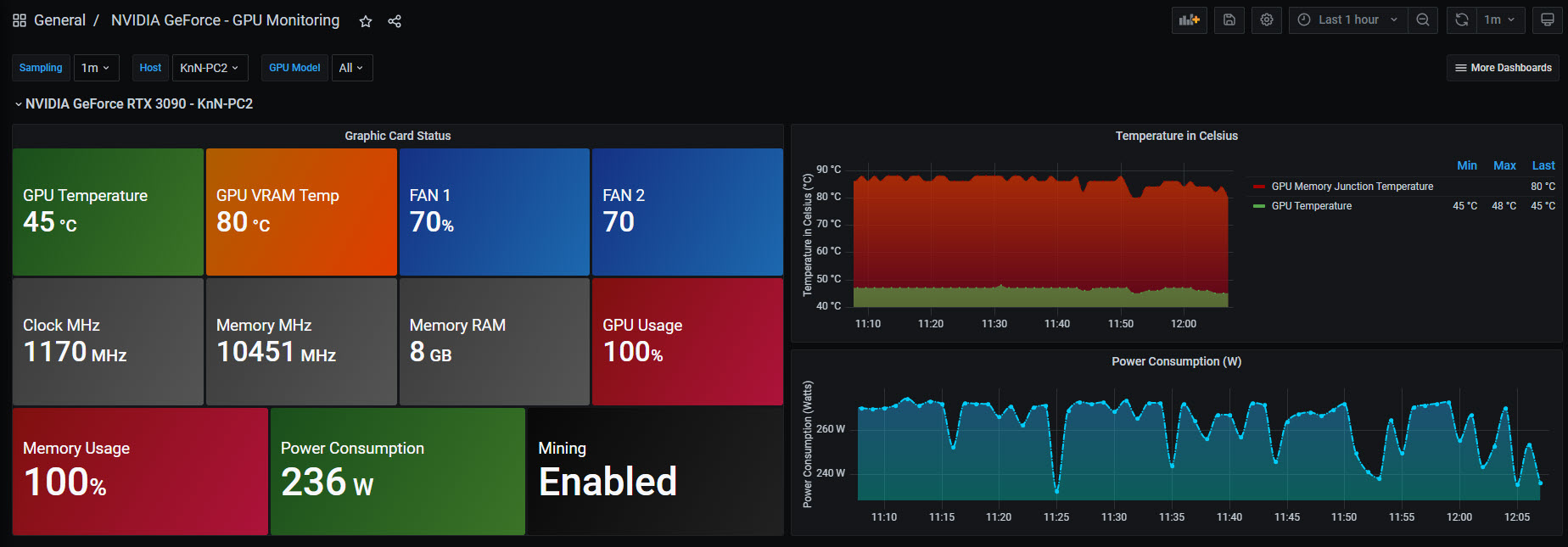

Looking for the Perfect Dashboard: InfluxDB, Telegraf and Grafana - Part XXXV (GPU Monitoring) - The Blog of Jorge de la Cruz

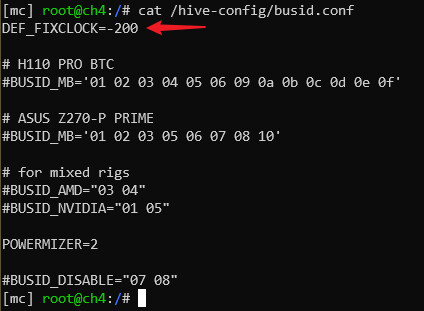

Locked core clock speed is much better than power-limit, why is not included by default? - Nvidia Cards - Forum and Knowledge Base

Locked core clock speed is much better than power-limit, why is not included by default? - #80 by DediZones - Nvidia Cards - Forum and Knowledge Base

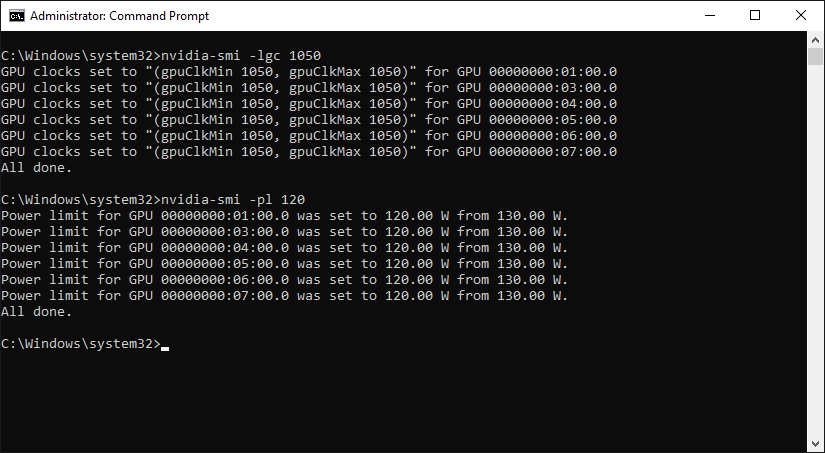

One weird trick to get a Maxwell v2 GPU to reach its max memory clock ! - CUDA Programming and Performance - NVIDIA Developer Forums

![\[381.xx\] \[BUG\] nvidia-settings incorrectly reports GPU clock speed - Linux - NVIDIA Developer Forums](https://global.discourse-cdn.com/nvidia/original/2X/5/5e411dd76fecd1b919dfa63b81f31889b483a5bd.png)